RoadhammerGaming


Hello, I'm trying to implement a simple bandpass filter in my project, but I can't seem to read the output data.

Notes: I'm using Android Studio with the latest Tarsos audio library (TarsosDSP), which is supposed to be compatible with Android, and I have successfully added the library to my Android Studio project. I previously tried the JTransforms and Minim libraries with no luck. EDITED 8/23/17: found and fixed some bugs and reposted the current code, but I still haven't made any progress on the actual problem summarized below:

Summary: in the fifth code block I posted (`writeAudioDataToFile()`), I need to know how to get the commented-out line `//read = dispatcher.(data, 0, bufferSize);` to work, because I can't get at the data that needs to be read.

What I'm trying to do is record from the microphone, apply the DSP BandPass filter from the Tarsos library while recording, and write the result to a .wav file. I can successfully stream the microphone to a .wav file by following this tutorial with the android.media imports, but that approach doesn't let me add the BandPass filter. With the Tarsos functions, on the other hand, I can't use the tutorial's save-as-.wav methods. I know I'm missing something and/or doing something wrong, but I've been googling this for almost a week without finding a working solution. I've only found links to the Java files inside the library, which aren't helpful because I couldn't find any tutorials on how to use them correctly. What am I doing wrong? Here is the relevant code for the Tarsos method I'm trying to use:


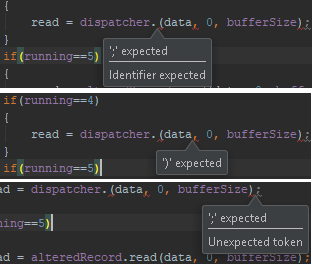

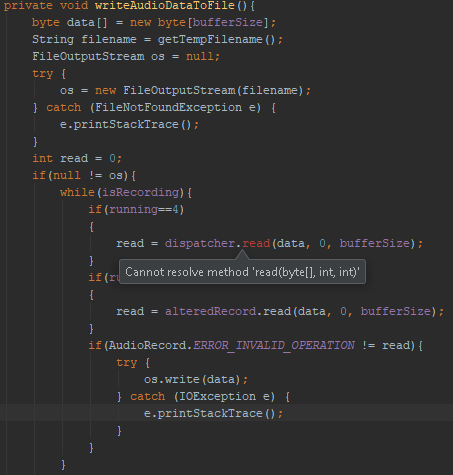



The related imports and 'global' variables:

```java
import android.media.AudioFormat;
import android.media.AudioRecord;
import android.media.AudioTrack;
import android.media.MediaRecorder;

import be.tarsos.dsp.AudioDispatcher;
import be.tarsos.dsp.AudioProcessor;
import be.tarsos.dsp.filters.BandPass;
import be.tarsos.dsp.io.android.AudioDispatcherFactory;

// java.io classes used by the file-writing methods further down
import java.io.File;
import java.io.FileInputStream;
import java.io.FileNotFoundException;
import java.io.FileOutputStream;
import java.io.IOException;

//start the class

AudioRecord alteredRecord = null;
AudioDispatcher dispatcher;
float freqChange;
float tollerance;

private static final int RECORDER_BPP = 16;
private static final String AUDIO_RECORDER_FOLDER = "Crowd_Speech";
private static final String AUDIO_RECORDER_TEMP_FILE = "record_temp.raw";
private static final int RECORDER_SAMPLERATE = 44100;
private static final int RECORDER_CHANNELS = AudioFormat.CHANNEL_IN_MONO;
private static final int RECORDER_AUDIO_ENCODING = AudioFormat.ENCODING_PCM_16BIT;
private int bufferSize = 1024;
private Thread recordingThread = null;

//set the min buffer size in onCreate event (getMinBufferSize returns a size in bytes)
bufferSize = AudioRecord.getMinBufferSize(RECORDER_SAMPLERATE,
        RECORDER_CHANNELS, RECORDER_AUDIO_ENCODING) * 4;
```

This starts the mic recording inside an onClick method. By commenting/uncommenting one of the two `running` values, I can switch between the filtered (Tarsos) and unfiltered (android.media) paths when the `startRecording` method is called:

```java
if (crowdFilter && running == 0 && set == 0) { // crowd speech mode, start talking
    Icons(2, "");
    running = 4;  // start recording from mic, apply bandpass filter and save as wave file using TARSOS import
    //running = 5; // start recording from mic, no filter, save as wav file using android media import
    freqChange = Globals.minFr[Globals.curUser];
    tollerance = 40;
    set = 1;
    startRecording();
}
```

The start recording method:

```java
private void startRecording() {
    if (running == 5) { // start recording from mic, no filter, save as wav file using android media library
        alteredRecord = new AudioRecord(MediaRecorder.AudioSource.MIC, RECORDER_SAMPLERATE,
                RECORDER_CHANNELS, RECORDER_AUDIO_ENCODING, bufferSize);
        alteredRecord.startRecording();
        isRecording = true;
        recordingThread = new Thread(new Runnable() {
            @Override
            public void run() {
                writeAudioDataToFile();
            }
        }, "Crowd_Speech Thread");
        recordingThread.start();
    }

    if (running == 4) { // start recording from mic, apply bandpass filter and save as wave file using TARSOS library
        dispatcher = AudioDispatcherFactory.fromDefaultMicrophone(RECORDER_SAMPLERATE, bufferSize, 0);
        AudioProcessor p = new BandPass(freqChange, tollerance, RECORDER_SAMPLERATE);
        dispatcher.addAudioProcessor(p);
        isRecording = true;
        // note: run() blocks the calling thread until the dispatcher stops,
        // so the recording thread below is not started while recording
        dispatcher.run();
        recordingThread = new Thread(new Runnable() {
            @Override
            public void run() {
                writeAudioDataToFile();
            }
        }, "Crowd_Speech Thread");
        recordingThread.start();
    }
}
```

Stop recording button inside an onClick method:

```java
if (crowdFilter && (running == 4 || running == 5) && set == 0) { // crowd speech finished talking
    Icons(1, "");
    stopRecording();
    set = 1;
}
```

Both cases are fine up to this point. If `running == 4` (Tarsos DSP filter applied), the program won't compile unless the data-read line is commented out. If I use `running == 5` (the android.media way, with no filter), everything else works fine and the file is saved, but no BandPass effect is applied. If I try swapping `alteredRecord = new AudioRecord...` for the Tarsos `dispatcher = AudioDispatcherFactory...` (or mixing them, e.g. `dispatcher = new AudioRecord...`), the types are incompatible and it won't even come close to compiling. That's why the `dispatcher` read line in the following method is commented out:

```java
private void writeAudioDataToFile() {
    byte[] data = new byte[bufferSize];
    String filename = getTempFilename();
    FileOutputStream os = null;
    try {
        os = new FileOutputStream(filename);
    } catch (FileNotFoundException e) {
        e.printStackTrace();
    }

    int read = 0;
    if (null != os) {
        while (isRecording) {
            if (running == 4) {
                //read = dispatcher.(data, 0, bufferSize);
            }
            if (running == 5) {
                read = alteredRecord.read(data, 0, bufferSize);
            }
            // write only the bytes actually read (AudioRecord.read() returns a negative error code on failure)
            if (read > 0) {
                try {
                    os.write(data, 0, read);
                } catch (IOException e) {
                    e.printStackTrace();
                }
            }
        }
        try {
            os.close();
        } catch (IOException e) {
            e.printStackTrace();
        }
    }
}

private void stopRecording() {
    if (null != alteredRecord) {
        isRecording = false;
        int i = alteredRecord.getState();
        if (i == AudioRecord.STATE_INITIALIZED) {
            running = 0;
            alteredRecord.stop();
            alteredRecord.release();
            alteredRecord = null;
            recordingThread = null;
        }
    }
    if (null != dispatcher) {
        isRecording = false;
        running = 0;
        dispatcher.stop();
        recordingThread = null;
    }
    copyWaveFile(getTempFilename(), getFilename());
    deleteTempFile();
}

private void deleteTempFile() {
    File file = new File(getTempFilename());
    file.delete();
}

private void copyWaveFile(String inFilename, String outFilename) {
    FileInputStream in = null;
    FileOutputStream out = null;
    long totalAudioLen = 0;
    long totalDataLen = totalAudioLen + 36;
    long longSampleRate = RECORDER_SAMPLERATE;
    int channels = 1;
    long byteRate = RECORDER_BPP * RECORDER_SAMPLERATE * channels / 8;
    byte[] data = new byte[bufferSize];

    try {
        in = new FileInputStream(inFilename);
        out = new FileOutputStream(outFilename);
        totalAudioLen = in.getChannel().size();
        totalDataLen = totalAudioLen + 36;
        WriteWaveFileHeader(out, totalAudioLen, totalDataLen,
                longSampleRate, channels, byteRate);
        int n;
        while ((n = in.read(data)) != -1) {
            out.write(data, 0, n); // copy only the bytes read; the last chunk may be partial
        }
        in.close();
        out.close();
    } catch (FileNotFoundException e) {
        e.printStackTrace();
    } catch (IOException e) {
        e.printStackTrace();
    }
}

private void WriteWaveFileHeader(
        FileOutputStream out, long totalAudioLen,
        long totalDataLen, long longSampleRate, int channels,
        long byteRate) throws IOException {
    byte[] header = new byte[44];
    header[0] = 'R'; header[1] = 'I'; header[2] = 'F'; header[3] = 'F'; // RIFF/WAVE header
    header[4] = (byte) (totalDataLen & 0xff);
    header[5] = (byte) ((totalDataLen >> 8) & 0xff);
    header[6] = (byte) ((totalDataLen >> 16) & 0xff);
    header[7] = (byte) ((totalDataLen >> 24) & 0xff);
    header[8] = 'W'; header[9] = 'A'; header[10] = 'V'; header[11] = 'E';
    header[12] = 'f'; header[13] = 'm'; header[14] = 't'; header[15] = ' '; // 'fmt ' chunk
    header[16] = 16; header[17] = 0; header[18] = 0; header[19] = 0; // 4 bytes: size of 'fmt ' chunk
    header[20] = 1; header[21] = 0; // format = 1 (PCM)
    header[22] = (byte) channels; header[23] = 0;
    header[24] = (byte) (longSampleRate & 0xff);
    header[25] = (byte) ((longSampleRate >> 8) & 0xff);
    header[26] = (byte) ((longSampleRate >> 16) & 0xff);
    header[27] = (byte) ((longSampleRate >> 24) & 0xff);
    header[28] = (byte) (byteRate & 0xff);
    header[29] = (byte) ((byteRate >> 8) & 0xff);
    header[30] = (byte) ((byteRate >> 16) & 0xff);
    header[31] = (byte) ((byteRate >> 24) & 0xff);
    header[32] = (byte) (channels * RECORDER_BPP / 8); header[33] = 0; // block align: 2 bytes for 16-bit mono
    header[34] = RECORDER_BPP; header[35] = 0; // bits per sample
    header[36] = 'd'; header[37] = 'a'; header[38] = 't'; header[39] = 'a';
    header[40] = (byte) (totalAudioLen & 0xff);
    header[41] = (byte) ((totalAudioLen >> 8) & 0xff);
    header[42] = (byte) ((totalAudioLen >> 16) & 0xff);
    header[43] = (byte) ((totalAudioLen >> 24) & 0xff); // size of the PCM data
    out.write(header, 0, 44);
}
```
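The 44-byte header that `WriteWaveFileHeader()` fills in by hand can also be built with a little-endian `java.nio.ByteBuffer`, which makes each field's offset and width explicit. This is a plain-Java sketch, not part of TarsosDSP or Android; the class name `WavHeader` is hypothetical. It derives block align as channels × bitsPerSample / 8 (2 bytes for 16-bit mono) and byte rate as sampleRate × blockAlign.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

// Hypothetical helper: builds a canonical 44-byte PCM WAV header.
final class WavHeader {
    private WavHeader() {}

    /**
     * @param sampleRate    samples per second, e.g. 44100
     * @param channels      1 for mono, 2 for stereo
     * @param bitsPerSample e.g. 16
     * @param audioDataLen  size of the raw PCM data in bytes
     */
    static byte[] build(int sampleRate, int channels, int bitsPerSample, long audioDataLen) {
        int blockAlign = channels * bitsPerSample / 8; // bytes per sample frame
        int byteRate = sampleRate * blockAlign;        // bytes per second
        ByteBuffer b = ByteBuffer.allocate(44).order(ByteOrder.LITTLE_ENDIAN);
        b.put(new byte[] {'R', 'I', 'F', 'F'});
        b.putInt((int) (audioDataLen + 36));           // RIFF chunk size = file size - 8
        b.put(new byte[] {'W', 'A', 'V', 'E'});
        b.put(new byte[] {'f', 'm', 't', ' '});
        b.putInt(16);                                  // size of the 'fmt ' chunk for PCM
        b.putShort((short) 1);                         // audio format 1 = PCM
        b.putShort((short) channels);
        b.putInt(sampleRate);
        b.putInt(byteRate);
        b.putShort((short) blockAlign);
        b.putShort((short) bitsPerSample);
        b.put(new byte[] {'d', 'a', 't', 'a'});
        b.putInt((int) audioDataLen);                  // size of the PCM data that follows
        return b.array();
    }
}
```

In `copyWaveFile()`, `out.write(WavHeader.build(RECORDER_SAMPLERATE, channels, RECORDER_BPP, totalAudioLen));` could stand in for the `WriteWaveFileHeader(...)` call.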

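On the commented-out `dispatcher.(data, 0, bufferSize)` line: as far as I know, TarsosDSP's `AudioDispatcher` has no `AudioRecord`-style `read()`. Instead it pushes each buffer through the processors registered with `addAudioProcessor()` by calling their `process(AudioEvent)` method, and the event's `getFloatBuffer()` holds the samples as floats (already band-passed if the `BandPass` was added first). One approach, then, is to add a second processor after the `BandPass` whose `process()` converts that float buffer to bytes and writes them to the temp file that `copyWaveFile()` wraps, rather than polling from `writeAudioDataToFile()`. Since `AudioDispatcher` implements `Runnable`, it would also need its own thread instead of `dispatcher.run()` inside the click handler. I believe recent TarsosDSP versions also include a `WriterProcessor` class in `be.tarsos.dsp.writer` for this; check your copy of the library. The plain-Java sketch below covers only the conversion step. `PcmConverter` and `toPcm16LE` are hypothetical names, and it assumes the floats are scaled to roughly [-1, 1] and that the target is the 16-bit mono little-endian PCM described by the WAV header above.

```java
// Hypothetical helper: packs float samples (assumed in [-1, 1]) as
// signed 16-bit little-endian PCM, the layout of the raw temp file.
final class PcmConverter {
    private PcmConverter() {}

    static byte[] toPcm16LE(float[] samples, int count) {
        byte[] out = new byte[count * 2];
        for (int i = 0; i < count; i++) {
            // clamp so filter overshoot cannot wrap around to the opposite sign
            float s = Math.max(-1f, Math.min(1f, samples[i]));
            int v = Math.round(s * 32767f);
            out[2 * i] = (byte) (v & 0xff);            // low byte first (little-endian)
            out[2 * i + 1] = (byte) ((v >> 8) & 0xff); // high byte second
        }
        return out;
    }
}
```

Inside such a processor, the write would look something like `os.write(PcmConverter.toPcm16LE(buf, buf.length));`, where `buf` is the event's float buffer and `os` is the temp-file stream.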